
Annotating Texts in Educational Web-Based System

Maroš Unčík

Bachelor thesis project supervised by prof. Mária Bieliková

Motivation

Questions are an important part of any learning material: they summarize the key facts and let students actively use their knowledge. There are approaches to extracting relevant questions automatically, but the quality of the extracted questions is still low. Having an expert create questions is tedious and extremely time-consuming, and from the expert's point of view it is often difficult to specify the difficulty of questions and to choose relevant ones.

Method

We proposed a method for the collaborative creation of questions, which consists of creating, adding and evaluating questions. We use peer review with explicit and implicit feedback from students, followed by a final evaluation by an expert (a teacher). For this purpose we designed a student rating model and a question rating model. Both models are based on the evaluation of factors. Each factor has its own weight, whose value was determined experimentally.

The student rating model is determined by four factors:

- Creating questions,

- Answering questions,

- Explicit rating of questions,

- Similar rating.

The student rating model is used to quantify how well a particular student is able to add questions. It also uses the question rating model, which is determined by four factors:

- Explicit rating of the question,

- Count of answers,

- Count of "I don't know",

- Count of mistakes.

The models evaluate the factors and combine them with the appropriate weights. The question rating model rates all questions. The threshold in the proposed models was set so that it selects approximately the 20% best-rated questions.
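The weighted combination of factors and the 20% cut-off can be sketched roughly as follows. This is only an illustration under stated assumptions: the weight values, their signs, the normalisation of factors to the range 0 to 1, and all names (`QuestionStats`, `question_rating`, `best_rated`) are hypothetical and are not taken from the thesis, which says only that the weights were determined experimentally.

```python
from dataclasses import dataclass

# Hypothetical weights. The source states only that each factor has an
# experimentally determined weight; these values and signs are placeholders.
WEIGHTS = {
    "explicit_rating": 0.4,
    "answers": 0.3,
    "dont_know": -0.1,
    "mistakes": -0.2,
}


@dataclass
class QuestionStats:
    question_id: str
    explicit_rating: float  # assumed normalised to [0, 1]
    answers: float          # assumed normalised count of answers
    dont_know: float        # assumed normalised count of "I don't know"
    mistakes: float         # assumed normalised count of mistakes


def question_rating(stats, weights=WEIGHTS):
    """Weighted sum of the four factors of one question."""
    return sum(w * getattr(stats, name) for name, w in weights.items())


def best_rated(questions, fraction=0.2):
    """Return roughly the top `fraction` of questions by rating."""
    ranked = sorted(questions, key=question_rating, reverse=True)
    keep = max(1, int(len(ranked) * fraction))
    return ranked[:keep]
```

With ten questions and the default fraction, `best_rated` returns the two highest-rated ones. A student rating model could be sketched the same way with its own four factors and weights.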

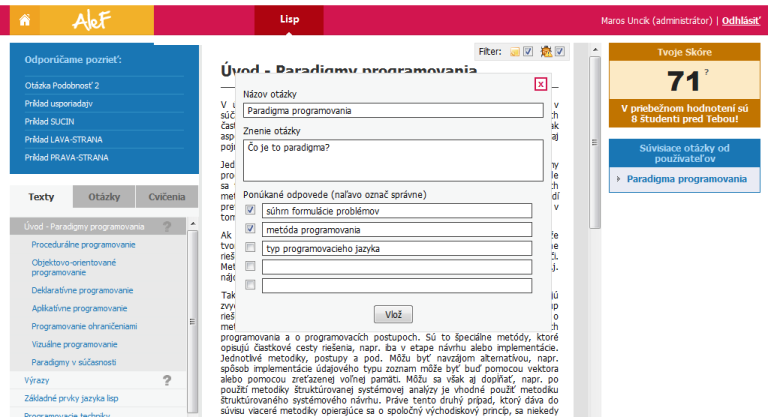

An important element of the proposed method is student motivation, for which we use a simple game principle. Students earn points that they can see and compare with those of their peers. Their task is to find a tactic that brings them as many points as possible. In this way we motivate students both to carry out activities related to questions and to perform these activities as well as they can.

Evaluation

To evaluate the proposed method, we experimented in the domain of functional and logic programming. We developed software components that are part of the ALEF framework. They allow students to create and answer questions. We also developed a component for motivating the students. Students can add four types of questions: single choice, multi choice, sorter and text complement questions. Students create and add questions in much the same way as annotations are added in similar systems. First, they select the text to which the question will be bound, then they select the type of question and provide the question itself. The question is permanently added and bound to the place where it was created. Other students can answer and rate the questions of their peers.
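The workflow of selecting text, choosing a type and providing the question might be modelled along these lines. This is a minimal sketch, not the ALEF implementation: the class and function names, the character-offset representation of a selection, and the validation rules are all assumptions made for illustration. Only the four question types come from the text above.

```python
from dataclasses import dataclass
from enum import Enum


class QuestionType(Enum):
    SINGLE_CHOICE = "single choice"
    MULTI_CHOICE = "multi choice"
    SORTER = "sorter"
    TEXT_COMPLEMENT = "text complement"


@dataclass(frozen=True)
class TextSelection:
    # Hypothetical anchor: character offsets into one learning document.
    document_id: str
    start: int
    end: int


@dataclass
class Question:
    author: str
    selection: TextSelection
    qtype: QuestionType
    text: str


def create_question(author, document_id, document_text, start, end, qtype, text):
    """Bind a new question to the selected span of a document."""
    if not (0 <= start < end <= len(document_text)):
        raise ValueError("selection must be a non-empty range inside the document")
    if not text.strip():
        raise ValueError("question text must not be empty")
    selection = TextSelection(document_id, start, end)
    return Question(author, selection, qtype, text.strip())
```

Freezing `TextSelection` mirrors the statement that a question stays bound to the place where it was created.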

Experiment

We performed an uncontrolled experiment in the course Functional and Logic Programming at the Faculty of Informatics and Information Technologies. Students added questions to the learning materials; their peers answered and rated them and labeled faulty questions in the set of added questions. In this way we obtained 88 student-created questions. We manually rated all questions with one of three grades. Then we compared the best manually rated questions with the questions rated best by the question rating model. The overlap was 56.65%. The experiment and its results are described in more detail in the publications below.
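One way to compute such an overlap is sketched below. The exact definition used in the experiment is not given here, so this assumes the overlap is the share of the expert's best-rated questions that also appear among the model's best-rated questions; the function name and the sample identifiers are hypothetical.

```python
def overlap_percentage(expert_best, model_best):
    """Percentage of expert-selected questions that the model also selected."""
    expert_best = set(expert_best)
    if not expert_best:
        raise ValueError("expert_best must contain at least one question")
    shared = expert_best & set(model_best)
    return 100.0 * len(shared) / len(expert_best)


# Illustrative identifiers only, not data from the experiment.
expert = {"q1", "q2", "q3", "q4"}
model = {"q2", "q4", "q9"}
print(overlap_percentage(expert, model))  # 50.0
```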

Publications

- Šimko, M., Barla, M., Michlík, P., Labaj, M., Mihál, M., Unčík, M.: ALEF: Learning and Collaboration in Web 2.0. In Proc. of Informatics and Information Technology Student Research Conference IIT.SRC 2010, pp. 138-145.

- Unčík, M., Bieliková, M.: Annotating Educational Content by Questions Created by Learners. In Proc. of SMAP 2010. IEEE Signal Processing Society, pp. 13-18.

- Unčík, M.: Annotating Texts in Educational Web-Based System. Bachelor thesis, Slovak University of Technology in Bratislava, 2010. 63 p. (in Slovak)

- Unčík, M.: Annotating Texts in Educational Web-Based System. In Proc. of Informatics and Information Technology Student Research Conference IIT.SRC 2010, pp. 27-34.
